Introduction

I recently completed a performance review for a client’s ASP.NET MVC web app that was running slowly. Thankfully, their application was using the LINQ-to-SQL version of PLINQO, so it was easy to identify and resolve the data access bottlenecks. Below, I explain the technologies and techniques I used to solve their server-side performance issues.

Why I Still Use LINQ-to-SQL

You may be wondering why anyone would continue to use LINQ-to-SQL (L2S) when Microsoft is pushing Entity Framework (EF) on .NET developers. There are several reasons:

- Lightweight – Compared to EF, L2S is much lighter. There is less code and less overhead.

- Simplicity – I don’t mind complexity as long as it serves a purpose, but the bloat of EF is usually not necessary.

- Performance – Performance tests have consistently shown faster application performance with L2S.

- Cleaner SQL – The actual T-SQL generated by L2S tends to be simpler and cleaner than that of EF.

- Enhancements – PLINQO adds a ton of features to L2S including caching, future queries, batched queries, bulk update/delete, auditing, a business rule engine and more. Many of these features are not available with Entity Framework out-of-the-box.

Didn’t Microsoft say LINQ-to-SQL was dead?

Contrary to popular belief, Microsoft has never said that LINQ to SQL is dead, and according to Damien Guard’s LINQ to SQL 4.0 feature list, improvements are still being made.

Microsoft also released the following statement about their plan to continue support for LINQ to SQL:

Question #3: Where does Microsoft stand on LINQ to SQL?

Answer: We would like to be very transparent with our customers about our intentions for future innovation with respect to LINQ to SQL and the Entity Framework.

In .NET 4.0, we continue to invest in both technologies. Within LINQ to SQL, we made a number of performance and usability enhancements, as well as updates to the class designer and code generation. Within the Entity Framework, we listened to a great deal to customer feedback and responded with significant investments including better foreign key support, T4 code generation, and POCO support.

Moving forward, Microsoft is committing to supporting both technologies as important parts of the .NET Framework, adding new features that meet customer requirements. We do, however, expect that the bulk of our overall investment will be in the Entity Framework, as this framework is built around the Entity Data Model (EDM). EDM represents a key strategic direction for Microsoft that spans many of our products, including SQL Server, .NET, and Visual Studio. EDM-based tools, languages and frameworks are important technologies that enable our customers and partners to increase productivity across the development lifecycle and enable better integration across applications and data sources.

Reasons to Use Entity Framework

The main reasons to use EF are:

- Support for Other Databases – It can be used with databases other than Microsoft SQL Server, including MySQL and Oracle. Of course, if you’re programming an application in .NET, you’re probably using MSSQL anyway.

- POCO Support – This stands for “Plain Old CLR Objects” (sometimes “Plain Old C# Objects”). Your entity classes don’t have to inherit from a framework base class, so you can take a code-first approach to designing your data entities. This is opposed to the database-first approach used by many ORMs.

- Abstraction – EF lets you add greater abstraction to your data objects so they aren’t as tightly coupled with your database schema. With L2S, all of your entities are locked into the Active Record Pattern where objects and properties are mapped directly to database tables and columns.

In my opinion, those arguments aren’t good enough to outweigh the benefits of the L2S version of PLINQO in most business scenarios. If you really need to use EF, you should check out the Entity Framework version of PLINQO. It doesn’t have all of the features of the L2S version, but it provides some of the same benefits.

What About NHibernate?

There is also an NHibernate version of PLINQO. NHibernate is just as bloated as EF, but it is the most mature Object Relational Mapper (ORM) available. As far as I know, it has more features than any other ORM, and there are many extensions available, along with a ton of documentation and support forums. This is the only flavor of PLINQO that supports multiple database technologies including MSSQL, MySQL, Oracle, DB2 and others.

How to Identify Query Performance Issues

There are two common tools available to intercept the underlying SQL activity that is being executed by LINQ:

- SQL Server Profiler – This SQL client tool is included with Developer, Standard and Enterprise editions of SQL Server. It works best when using a local copy of the database. Tip – It helps to add a filter to the trace settings to only display activity from the SQL login used by the web application.

- LINQ to SQL Profiler – This is a code-based logging tool. It is less convenient than SQL Server Profiler, but it works well if you are connecting to a remote SQL Server. http://www.codesmithtools.com/product/frameworks/plinqo/tour/profiler

Eager Loading versus Lazy Loading

By default, LINQ-to-SQL uses “lazy loading” in database queries. This means that only the data model(s) specifically requested in the query will be returned. Related entities (objects or collections related by foreign key) are not immediately loaded. However, the related entities will be automatically loaded later if they are referenced. Essentially, only the primary object in the object graph will be hydrated during the initial query. Related objects are hydrated on-demand only if they are called later.

In general, lazy loading performs well because it limits the amount of data that is fetched from the database. But in some scenarios it introduces a large volume of redundant queries back to SQL Server. This often occurs when the application encounters a loop which references related entities. Following is an example of that scenario.

Lazy loading sample controller code (C#):

```csharp
public ActionResult Index() {
    MyDataContext db = new MyDataContext();
    List<User> users = db.Users.ToList();
    return View(users);
}
```

Lazy loading sample view code (Razor):

```cshtml
@model IEnumerable<User>

@foreach (var user in Model) {
    <b>Username:</b> @user.Username <br />
    <b>Roles:</b>
    <ul>
        @foreach (var role in user.RoleList) {
            <li>@role.RoleName - @role.RoleType.RoleTypeName</li>
        }
    </ul>
}
```

With the above code, the List<User> object will initially be filled with data from the Users table. When the MVC Razor view is executed, the code will loop through each User to output the Username and the list of Roles. Lazy loading will automatically pull in the RoleList for each User (user.RoleList), executing a separate SQL query for each User record. Another query for RoleType will be executed for every Role (@role.RoleType.RoleTypeName).

In our hypothetical example, let’s assume there are 100 Users in the database. Each User has 3 Roles attached (N:N relationship). Each Role has 1 RoleType attached (N:1 relationship). In this case, 401 total queries will be executed: 1 query returning 100 User records, then 100 queries fetching Role records (3 Roles per User), then 300 queries fetching RoleType records. L2S is not even smart enough to cache the RoleType records, even though the same records will be requested many times. Granted, the lookup queries are simple and efficient (they fetch related data by primary key), but that large volume of round trips to SQL Server is unnecessary.
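To see where the 401 figure comes from, here is a minimal simulation in plain C# with no database. The FakeDatabase and LazyLoadingDemo classes are hypothetical stand-ins written only for this illustration (they are not part of L2S or PLINQO). Each method call on FakeDatabase counts as one round trip, mirroring how each lazy-loaded navigation issues its own query in the scenario above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for SQL Server: every method call counts as one round trip.
public class FakeDatabase
{
    public int QueryCount { get; private set; }

    private readonly int _userCount;
    private readonly int _rolesPerUser;

    public FakeDatabase(int userCount, int rolesPerUser)
    {
        _userCount = userCount;
        _rolesPerUser = rolesPerUser;
    }

    // Like db.Users.ToList(): one query for the whole user list.
    public List<int> GetUserIds()
    {
        QueryCount++;
        return Enumerable.Range(1, _userCount).ToList();
    }

    // Like lazily loading user.RoleList: one query per user.
    public List<int> GetRoleIdsForUser(int userId)
    {
        QueryCount++;
        return Enumerable.Range(1, _rolesPerUser).ToList();
    }

    // Like lazily loading role.RoleType: one query per role, with no reuse of earlier results.
    public string GetRoleTypeName(int roleId)
    {
        QueryCount++;
        return "RoleType" + roleId;
    }
}

public static class LazyLoadingDemo
{
    // Mimics the Razor view: loop over users, then over each user's roles.
    public static int CountLazyQueries(int userCount, int rolesPerUser)
    {
        var db = new FakeDatabase(userCount, rolesPerUser);
        foreach (int userId in db.GetUserIds())
        {
            foreach (int roleId in db.GetRoleIdsForUser(userId))
            {
                db.GetRoleTypeName(roleId); // corresponds to @role.RoleType.RoleTypeName
            }
        }
        return db.QueryCount;
    }
}
```

For U users with R roles each, the lazy total is 1 + U + U × R, so CountLazyQueries(100, 3) returns 1 + 100 + 300 = 401. The count grows linearly with the data, which is exactly what eager loading is meant to avoid.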

Instead of relying on lazy loading to pull related entities, “eager loading” can be used to hydrate the object graph ahead of time. Eager loading can proactively fetch entities of any relationship type (N:1, 1:N, 1:1, or N:N). With L2S, eager loading is fully configurable so that developers can limit which relationships are loaded.

There are two methods of eager loading with L2S, both of which are part of Microsoft’s default implementation (i.e. these are not PLINQO-specific features). The first is to use a projection query to return results into a flattened data object called a “view model.” The second is to use DataLoadOptions to specify the relationship(s) to load.

View Model Approach

This approach to eager loading works well for many-to-one (N:1) and one-to-one (1:1) relationships but it does not handle many-to-many (N:N) relationships. However, N:1 is the most common type of lookup, so it works for the majority of scenarios.

The idea is to cast the result set into a “view model” data object which is a simple container for all of the required data output. This can be an anonymous type, but it is generally recommended that developers use a defined data type (see the PLINQO Future Queries section below for details).

Because our first example used an N:N relationship (Users to Roles), it cannot be improved using this methodology. However, it could be useful for loading other User relationships. Following is an example of eager loading data from a related User Profile entity (1:1) and a User Type entity (N:1).

View model sample controller code (C#):

```csharp
public class UserViewModel {
    public string Username { get; set; }
    public string UserTypeName { get; set; }
    public string Email { get; set; }
    public string TwitterHandle { get; set; }
}

public ActionResult Index() {
    MyDataContext db = new MyDataContext();

    //Project directly into the view model so only the needed columns are selected
    List<UserViewModel> users = db.Users
        .Select(u => new UserViewModel() {
            Username = u.Username,
            UserTypeName = u.UserType.UserTypeName,
            Email = u.UserProfile.Email,
            TwitterHandle = u.UserProfile.TwitterHandle
        }).ToList();

    return View(users);
}
```

View model sample view code (Razor):

```cshtml
@model IEnumerable<UserViewModel>

@foreach (var user in Model) {
    <b>Username:</b> @user.Username <br />
    <b>User Type:</b> @user.UserTypeName <br />
    <b>Email:</b> @user.Email <br />
    <b>Twitter: </b> @user.TwitterHandle
}
```

With this controller code, the LINQ query loads all of the UserViewModel properties in one SQL query. The generated SQL joins the UserType and UserProfile tables (using inner or outer joins, depending on whether the foreign key column is nullable), so all of the data is collected in one round trip.

Another benefit to the view model approach is a reduction of the volume of data sent over the network. Ordinarily, LINQ pulls all columns from each database table, regardless of how many data columns are actually displayed later. When a simplified return type is used, you are explicitly specifying the columns that should be returned from SQL Server. This is beneficial if your database table contains a large number of columns, or it has columns with large data sizes like varchar(MAX) or other BLOBs.

DataLoadOptions Approach

An easier way to eager load data is to specify DataLoadOptions for the LINQ database context. Each relationship is added to the DataLoadOptions via the LoadWith method. Note: This can only be done before any queries are executed. The DataLoadOptions property may not be set or modified once any objects are attached to the context. See http://msdn.microsoft.com/en-us/library/system.data.linq.dataloadoptions%28v=vs.110%29.aspx for details.

All relationship types are allowed in DataLoadOptions; therefore, it is the only way to eager load N:N relationships. There is also no limit to the number of associations that can be eager loaded, and LINQ is intelligent enough to ignore any associations that do not apply to a given query. Therefore, a single “master” DataLoadOptions can be applied to multiple queries throughout the application.

Revisiting the first example (Users and Roles), here is a slightly modified version.

DataLoadOptions sample controller code (C#):

```csharp
public ActionResult Index() {
    MyDataContext db = new MyDataContext();

    DataLoadOptions options = new DataLoadOptions();
    options.LoadWith<User>(u => u.RoleList); //Load all Roles for each User
    options.LoadWith<Role>(r => r.RoleType); //Load all RoleTypes for each Role
    db.LoadOptions = options;

    List<User> users = db.Users.ToList();
    return View(users);
}
```

DataLoadOptions sample view code (Razor):

```cshtml
@model IEnumerable<User>

@foreach (var user in Model) {
    <b>Username:</b> @user.Username <br />
    <b>Roles:</b>
    <ul>
        @foreach (var role in user.RoleList) {
            <li>@role.RoleName - @role.RoleType.RoleTypeName</li>
        }
    </ul>
}
```

Note that the Razor view code has not changed at all. The only difference is the controller code which adds DataLoadOptions to the LINQ data context. Although the view code is identical, this time only a single SQL query is executed, compared to 401 queries for the original controller code sample.

It is also worth noting that view model and DataLoadOptions approaches can be used together (i.e. they are not mutually exclusive). Any LoadWith relationships will be processed even when used inside a projection query.

View model with DataLoadOptions sample controller code (C#):

```csharp
public class UserViewModel {
    public string Username { get; set; }
    public string UserTypeName { get; set; }
    public string Email { get; set; }
    public string TwitterHandle { get; set; }
    public IEnumerable<Role> RoleList { get; set; }
}

public ActionResult Index() {
    MyDataContext db = new MyDataContext();

    DataLoadOptions options = new DataLoadOptions();
    options.LoadWith<User>(u => u.RoleList); //Load all Roles for each User
    options.LoadWith<Role>(r => r.RoleType); //Load all RoleTypes for each Role
    db.LoadOptions = options;

    List<UserViewModel> users = db.Users
        .Select(u => new UserViewModel() {
            Username = u.Username,
            UserTypeName = u.UserType.UserTypeName,
            Email = u.UserProfile.Email,
            TwitterHandle = u.UserProfile.TwitterHandle,
            RoleList = u.RoleList
        }).ToList();

    return View(users);
}
```

View model with DataLoadOptions sample view code (Razor):

```cshtml
@model IEnumerable<UserViewModel>

@foreach (var user in Model) {
    <b>Username:</b> @user.Username <br />
    <b>User Type:</b> @user.UserTypeName <br />
    <b>Email:</b> @user.Email <br />
    <b>Twitter: </b> @user.TwitterHandle <br />
    <b>Roles:</b>
    <ul>
        @foreach (var role in user.RoleList) {
            <li>@role.RoleName - @role.RoleType.RoleTypeName</li>
        }
    </ul>
}
```

This example is “the best of both worlds” because it pulls all required data in a single query without pulling unnecessary columns from the User, UserType or UserProfile tables. It still pulls all columns from the Role and RoleType tables.

PLINQO Caching

PLINQO adds intelligent caching features to the LINQ data context. Caching is implemented as LINQ query extension methods: .FromCache() is used for queries that return collections, and .FromCacheFirstOrDefault() is used for queries that return a single object.

PLINQO caching example (C#):

```csharp
//Returns one user, or null if not found
User someUser = db.Users.Where(u => u.Username == "administrator").FromCacheFirstOrDefault();

//Returns a collection of users, or an empty collection if not found
IEnumerable<User> adminUsers = db.Users.Where(u => u.Username.Contains("admin")).FromCache();
```

Note: These query extension methods change the return type for collections. Normally LINQ returns an IQueryable<T>; the cache extension methods return IEnumerable<T> instead. You can still convert the output with .ToList() or .ToArray().

Converting cached collections sample (C#):

```csharp
//Convert IEnumerable<User> to List<User>
List<User> userList = db.Users.FromCache().ToList();

//Convert IEnumerable<User> to an array
User[] userArray = db.Users.FromCache().ToArray();
```

The caching in PLINQO also allows developers to specify a cache duration. This can be passed as an integer (the number of seconds to retain the cache), or as a string that refers to a caching profile in the web.config or app.config.
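The idea behind an integer duration can be sketched without PLINQO: keep each result set together with an absolute expiration time, and only run the query again once that time has passed. SimpleQueryCache and GetOrExecute are hypothetical names for this illustration, not PLINQO’s API or implementation, and the cache key is supplied by the caller here purely to keep the sketch short.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch of a duration-based result cache; NOT PLINQO's implementation.
public class SimpleQueryCache
{
    // Each entry remembers when it expires and the materialized results.
    private readonly Dictionary<string, (DateTime Expires, object Value)> _entries =
        new Dictionary<string, (DateTime Expires, object Value)>();

    // The clock is injected so expiration can be tested without waiting.
    private readonly Func<DateTime> _now;

    public SimpleQueryCache(Func<DateTime> now)
    {
        _now = now;
    }

    // Returns cached results while they are fresh; otherwise runs the query
    // and keeps the results for durationSeconds (like passing an integer to FromCache).
    public List<T> GetOrExecute<T>(string key, int durationSeconds, Func<IEnumerable<T>> runQuery)
    {
        if (_entries.TryGetValue(key, out var entry) && entry.Expires > _now())
        {
            return (List<T>)entry.Value; // cache hit: the query is not executed
        }

        List<T> results = runQuery().ToList(); // cache miss: execute and materialize
        _entries[key] = (_now().AddSeconds(durationSeconds), results);
        return results;
    }
}
```

Materializing with ToList() before storing matters: caching the deferred query itself would not save a round trip, because every enumeration would run it again.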

Cache duration sample (C#):

```csharp
//Cache for 5 minutes (300 seconds)
IEnumerable<User> users = db.Users.FromCache(300);

//Cache according to the "Short" profile in web.config
IEnumerable<Role> roles = db.Roles.FromCache("Short");
```

The “Short” value above refers to a cache profile name in the web.config or app.config settings.

I recommend creating 3 caching profiles in the web.config. I created a profile called “Short” with a 5-minute duration. Because it is set as the defaultProfile, any .FromCache() call will use the “Short” profile unless another is specified.

Cache settings in web.config (XML):

```xml
<configSections>
  <section name="cacheManager" type="CodeSmith.Data.Caching.CacheManagerSection, CodeSmith.Data" />
</configSections>
<cacheManager defaultProvider="HttpCacheProvider" defaultProfile="Short">
  <profiles>
    <add name="Brief" description="Brief cache" duration="0:0:10" />
    <add name="Short" description="Short cache" duration="0:5:0" />
    <add name="Long" description="Long cache" duration="1:0:0" />
  </profiles>
  <providers>
    <add name="HttpCacheProvider" description="HttpCacheProvider" type="CodeSmith.Data.Caching.HttpCacheProvider, CodeSmith.Data" />
  </providers>
</cacheManager>
```

The “Short” profile caches items for 5 minutes, which is a good choice for most scenarios. The “Long” profile caches for 1 hour and is best suited for reference data that rarely changes. Finally, the special-case “Brief” profile (10 seconds) is useful in scenarios where a single data set is requested repeatedly during a single page load.
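PLINQO resolves profile names from the cacheManager section itself, so you never have to write this. Still, a small sketch makes the mapping concrete: each profile name maps to an "h:m:s" duration string, and a missing name falls back to the default profile. CacheProfileDurations and Resolve are hypothetical names, and the dictionary below simply hard-codes the three profiles from the web.config sample rather than reading configuration.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical sketch of profile-name resolution; PLINQO reads the real values from config.
public static class CacheProfileDurations
{
    // Same duration strings as the <profiles> entries in the web.config sample.
    private static readonly Dictionary<string, string> Profiles = new Dictionary<string, string>
    {
        ["Brief"] = "0:0:10",
        ["Short"] = "0:5:0",
        ["Long"] = "1:0:0",
    };

    // Mirrors defaultProfile="Short" on the <cacheManager> element.
    private const string DefaultProfile = "Short";

    // Returns the duration for a profile name, or the default profile when none is given.
    public static TimeSpan Resolve(string profileName = null)
    {
        string name = profileName ?? DefaultProfile;
        if (!Profiles.TryGetValue(name, out string duration))
        {
            throw new ArgumentException("Unknown cache profile: " + name, nameof(profileName));
        }
        // "h:m:s" strings such as "0:5:0" parse to TimeSpan values (here, 5 minutes).
        return TimeSpan.Parse(duration, CultureInfo.InvariantCulture);
    }
}
```

Failing loudly on an unknown name is a deliberate choice in this sketch: a typo in a profile string would otherwise silently change how long data is cached.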

You may want to create a CacheProfile.cs class to standardize your references. Here is an example that can be customized to your needs.

CacheProfile class (C#):

```csharp
/// <summary>
/// Cache duration names correspond to caching profiles in the web.config or app.config
/// </summary>
public static class CacheProfile
{
    /// <summary>
    /// 10 second cache duration.
    /// </summary>
    public const string Brief = "Brief";

    /// <summary>
    /// 5 minute cache duration.
    /// </summary>
    public const string Short = "Short";

    /// <summary>
    /// 1 hour cache duration.
    /// </summary>
    public const string Long = "Long";
}
```

CacheProfile class usage (C#):

```csharp
IEnumerable<User> briefUsers = db.Users.FromCache(CacheProfile.Brief); //Uses "Brief" profile
IEnumerable<User> shortUsers = db.Users.FromCache(); //Assumes the default "Short" profile
IEnumerable<User> longUsers = db.Users.FromCache(CacheProfile.Long); //Uses "Long" profile
```

PLINQO’s caching system has additional features like cache groups, explicit cache invalidation, and a cache manager for non-LINQ objects. See http://www.codesmithtools.com/product/frameworks/plinqo/tour/caching for full documentation.

PLINQO Future Queries

The “futures” capability of PLINQO allows for intelligent batching of queries to reduce round trips to SQL Server. This is particularly helpful for MVC because multiple objects are often sent to the same view. Because the view is executed after the controller action, all of the deferred queries can often be sent to SQL Server together in a single round trip.

Futures usage in controller action (C#):

```csharp
public ActionResult EditUser(int userId) {
    MyDataContext db = new MyDataContext();

    var userFuture = db.Users.Where(u => u.UserID == userId).FutureFirstOrDefault(); //Deferred
    var roles = db.Roles.Future(); //Deferred
    var states = db.States.Future(); //Deferred

    //Reading .Value executes all of the queued Future queries in one batch
    User user = userFuture.Value;

    ViewBag.RoleOptions = new SelectList(roles, "RoleID", "RoleName");
    ViewBag.StateOptions = new SelectList(states, "StateID", "StateName", user.StateID);

    return View(user);
}
```

Instead of executing these 3 queries independently, PLINQO batches them into a single round trip to SQL Server. The Future() extension makes use of LINQ-to-SQL’s deferred execution feature. In other words, LINQ does not actually execute any of these queries until one of them is enumerated.
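The batching behavior described above can be sketched in a few lines of plain C#. This is an illustration of the idea only, not PLINQO’s implementation: FutureBatch, Future and RoundTrips are hypothetical names, and a "round trip" is just a counter. Each Future call queues a query and returns a deferred result; the first time any of those results is enumerated, every queued query runs together.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

// Hypothetical sketch of query batching; it illustrates the idea, not PLINQO's implementation.
public class FutureBatch
{
    // Loaders for queries that have been registered but not executed yet.
    private readonly List<Action> _pending = new List<Action>();

    // Counts simulated trips to the database server.
    public int RoundTrips { get; private set; }

    // Registers a query without running it and returns a deferred result.
    public IEnumerable<T> Future<T>(Func<IEnumerable<T>> query)
    {
        var future = new FutureResult<T>(this);
        _pending.Add(() => future.Results = new List<T>(query()));
        return future;
    }

    // Runs every pending query as part of a single simulated round trip.
    private void ExecuteAll()
    {
        if (_pending.Count == 0) return;
        RoundTrips++;
        foreach (Action load in _pending) load();
        _pending.Clear();
    }

    private sealed class FutureResult<T> : IEnumerable<T>
    {
        private readonly FutureBatch _batch;
        public List<T> Results;

        public FutureResult(FutureBatch batch) { _batch = batch; }

        public IEnumerator<T> GetEnumerator()
        {
            // The first enumeration of ANY future flushes the whole batch.
            if (Results == null) _batch.ExecuteAll();
            return Results.GetEnumerator();
        }

        IEnumerator IEnumerable.GetEnumerator() => GetEnumerator();
    }
}
```

In terms of the EditUser action, registering the user, role and state queries corresponds to three Future calls, and whichever result is read first pulls all three in one round trip.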

Enumeration occurs when:

- Any code attempts to access the contents of the collection, usually by looping through the collection (for each item in collection), or…

- The objects in the collection are counted. For example: RoleOptions.Count(), or…

- The collection is converted to another type through .ToList() or .ToArray().
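LINQ-to-Objects follows the same deferred-execution rules, so the three triggers listed above can be observed without a database. In this sketch (DeferredExecutionDemo is a hypothetical name), a counter inside the Where filter stands in for the work a SQL query would do, and the returned array records the running count after each step.

```csharp
using System.Collections.Generic;
using System.Linq;

// Plain LINQ-to-Objects (no database) showing when a deferred query actually runs.
public static class DeferredExecutionDemo
{
    // Returns the running number of filter calls after each step, plus the final item count.
    public static int[] Run()
    {
        int filterCalls = 0;
        var roles = new List<string> { "Admin", "Editor", "Viewer" };

        // Defining the query does not execute it.
        IEnumerable<string> query = roles.Where(r => { filterCalls++; return r != "Viewer"; });
        int afterDefinition = filterCalls;

        // 1. Looping through the collection enumerates the query.
        foreach (string role in query) { }
        int afterLoop = filterCalls;

        // 2. Counting enumerates it again.
        query.Count();
        int afterCount = filterCalls;

        // 3. ToList() enumerates it once more and materializes the results.
        List<string> list = query.ToList();
        int afterToList = filterCalls;

        // Looping over the materialized list does not re-run the filter.
        foreach (string role in list) { }
        int afterListLoop = filterCalls;

        return new[] { afterDefinition, afterLoop, afterCount, afterToList, afterListLoop, list.Count };
    }
}
```

Run() returns { 0, 3, 6, 9, 9, 2 }: nothing happens until the first enumeration, and each trigger re-runs a plain deferred query, while the materialized list does not. With L2S, each of those re-runs would be another trip to SQL Server.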

Caveats of future queries:

- If you want to use .Future() then you should not use .ToList() or .ToArray(). This causes immediate enumeration of the query. Frankly, there is rarely a need to immediately convert to a list or an array. The .Future() return type of IEnumerable<T> works perfectly well for most scenarios.

- Razor views tend to throw errors if futures are used with an anonymous type.

If you want to use futures, make sure that your results are returned into a known data type. In other words, the following will throw a runtime error when the Razor view is executed.

Anonymous type which causes runtime error (C#):

```csharp
//Fails at runtime when the view enumerates the collection
ViewBag.UserOptions = new SelectList(db.Users.Select(u => new { UserID = u.UserID, FirstName = u.FirstName }).Future(), "UserID", "FirstName");
```

However, the same Future query will work fine if a type is specified.

Future query with known type (C#):

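```csharp
public class UserOption { public int UserID { get; set; } public string FirstName { get; set; } } //Hypothetical named type for the list

//Works fine at runtime because UserOption is a known type
ViewBag.UserOptions = new SelectList(db.Users.Select(u => new UserOption() { UserID = u.UserID, FirstName = u.FirstName }).Future(), "UserID", "FirstName");
```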

Another nice feature is that PLINQO allows caching and futures together. There are extension methods which combine both features:

Futures with caching sample (C#):

```csharp
//Returns one user, or null if not found
User someUser = db.Users.Where(u => u.Username == "administrator").FutureCacheFirstOrDefault();

//Returns a collection of users
IEnumerable<User> adminUsers = db.Users.Where(u => u.Username.Contains("admin")).FutureCache();
```

The term “FromCache” is simply changed to “FutureCache.” These extension methods support the same cache duration settings as FromCache().

For more details on PLINQO Future Queries, see http://www.codesmithtools.com/product/frameworks/plinqo/tour/futurequeries

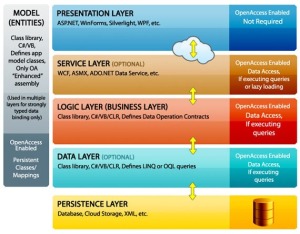

Best Practices for Query Reuse

Often, there are a lot of common queries that are repeated throughout an application. Ideally, your application should have a data service layer or business logic layer to house common queries for easy reuse. However, if that is not currently in place, the simplest solution is to add methods to each controller as appropriate. This would still improve code reuse with minimal programming changes.

Sample redundant controller code (C#):

```csharp
public ActionResult FirstPage() {
    ViewBag.CustomerList = new SelectList(db.Customers.OrderBy(c => c.CustomerID), "CustomerID", "CustomerName");
    return View();
}

public ActionResult SecondPage() {
    ViewBag.CustomerList = new SelectList(db.Customers.OrderBy(c => c.CustomerID), "CustomerID", "CustomerName");
    return View();
}
```

Suggested replacement code (C#):

```csharp
public IEnumerable<SelectListItem> GetCustomerList()
{
    return (from c in db.Customers
            orderby c.CustomerName
            select new SelectListItem() { Value = c.CustomerID.ToString(), Text = c.CustomerName })
        .FutureCache(CacheProfile.Long);
}

public ActionResult FirstPage() {
    ViewBag.CustomerList = new SelectList(GetCustomerList(), "Value", "Text");
    return View();
}

public ActionResult SecondPage() {
    ViewBag.CustomerList = new SelectList(GetCustomerList(), "Value", "Text");
    return View();
}
```

This reduces the amount of redundant copy-paste query code while also implementing Futures and Caching. Because the return type is IEnumerable<SelectListItem> it avoids the anonymous type issues described above. It can also handle lists where a selected value must be specified.

Sample selected value in a SelectList (C#):

```csharp
public IEnumerable<SelectListItem> GetCountryList()
{
    return (from c in db.Countries
            orderby c.CountryName
            select new SelectListItem() { Value = c.CountryID.ToString(), Text = c.CountryName })
        .FutureCache(CacheProfile.Long);
}

public ActionResult EditUser(int userId)
{
    User user = db.Users.GetByKey(userId);
    ViewBag.CountryList = new SelectList(GetCountryList(), "Value", "Text", user.CountryID);
    return View(user);
}
```

On a similar note, controllers often reuse the same relationships in multiple actions. Because of this, it is usually beneficial to create a private method that specifies the common DataLoadOptions. This is preferably done in a business layer, but the controller is acceptable in a pinch.

MVC Controller DataLoadOptions sample (C#):

```csharp
private DataLoadOptions EagerLoadUserRelationships()
{
    DataLoadOptions options = new DataLoadOptions();
    options.LoadWith<User>(u => u.Country);
    options.LoadWith<User>(u => u.RoleList);
    options.LoadWith<Role>(r => r.RoleType);
    return options;
}

public ActionResult Index() {
    MyDataContext db = new MyDataContext();
    db.LoadOptions = EagerLoadUserRelationships(); //Set before any query runs
    IEnumerable<User> userList = db.Users.Where(...).Future();
    return View(userList);
}
```

Other Performance Improvements

PLINQO has a number of other performance improvements including:

- Bulk updates (update many records with a single command).

- Bulk deletion (delete many records with a single command).

- Stored procedures with multiple result sets.

- Batch queries.

These features are documented here: http://www.codesmithtools.com/product/frameworks/plinqo/tour/performance

I hope you found this useful. Happy coding!